Identification: What is it?

Identification and identifiable are two commonly used words in Statistics and Economics. But, have you ever wondered, what does it really mean to say that a quantity is identifiable from the data? Statisticians seem to agree on a definition in the context of parametric models — calling a parameter identifiable if distinct values of the parameter correspond to distinct members of a parametric family. Intuitively, this means that if we had an infinite amount of data, we could learn the actual model parameters used to generate the data — it’s kinda neat!

So this definition makes sense for a parametric model, but what about nonparametric models? And what if you don’t have an explicit model that you want to use? For instance, in the context of an experiment or an observational study, we might want to ask whether the average treatment effect of an intervention is identifiable from the data. Unfortunately, the simple definition of parametric identifiability does not apply if we take a nonparametric or randomization-based approach.

This post will build on the parametric intuition to describe a more general notion of identifiability that works for all types of settings. Our goal is to explain the definition and provide examples of how to apply it in a range of different contexts. The next few posts in the series will dive deeper into the role of identification in the causal context.

A general notion of identifiability

Basic framework

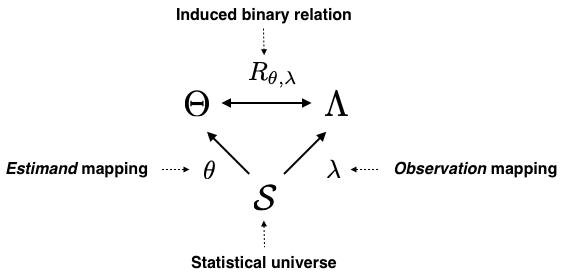

The identification framework we will consider consists of three elements:

- A statistical universe that contains all of the objects relevant to a given problem \(S\).

- An estimand mapping that describes what aspect of the statistical universe we are trying to learn about \(\theta : S \to \Theta \).

- An observation mapping that tells us what parts of our statistical universe we observe \( \lambda: S \to \Lambda \).

We then define identification by studying the inherent relationship between the estimand mapping and the observation mapping using the induced binary relation \(R\). Intuitively, the induced binary relation connects “what we know” to “what we are trying to learn” through the “statistical universe” in which we operate.

Binary relation interlude: If you are not familiar with binary relations, or it’s been a while since you’ve seen them, they are basically a generalization of a function. Mathematically, a binary relation \(R\) from a set \(\Theta\) to \(\Lambda\) is a subset of the cartesian product \(\Theta \times \Lambda\). For \(\vartheta \in \Theta\) and \(\ell \in \Lambda\), we say that \(\vartheta\) is \(R\)-related to \(\ell\) if \((\vartheta, \ell) \in R\) and write \(\vartheta R \ell\).

For example, let \(\Theta\) be the set of prime numbers, \(\Lambda\) be the set of integers, and \(R\) the “divides” relation such that \(\vartheta R \ell \) if \(\vartheta\) divides \(\ell\) (e.g., \(3 R 3\), \(3 R 6\), but 3 is not in relation with 2).

Definition of Identification

Specifying the three elements of the framework for a given problem gives rise a special binary relation.

- Definition (Induced binary relation)

- Consider \(\mathcal{S} \) and \(\theta, \lambda \in G(\mathcal{S} )\), where \(G(\mathcal{S} )\) is the set of all function with domain \(\mathcal{S}\) and let \(\Theta = Img(\theta)\) and \(\Lambda = Img(\lambda)\). We define the binary relation \(R_{\theta, \lambda}\) induced by \(\mathcal{S}, \theta, \lambda\) as the subset \(R_{\theta,\lambda} = \{(\theta(S), \lambda(S)), S \in \mathcal{S}\} \subseteq \Theta \times \Lambda\).

With this in place, we say that \(\theta\), the estimand mapping, is identifiable from \(\lambda\) if the induced binary relation \(R\) is injective. In words, if there is a 1-1 relationship between what we are trying to estimate and what we observe, then the estimand mapping is identifiable from the observation mapping. This can be formalized mathematically as follows:

- Definition (Identifiability)

- Consider \(\mathcal{S}, \lambda, \theta\) and \(\Theta, \Lambda\) as in

the previous definition. For a given \(\ell_0 \in \Lambda\), let

\(\mathcal(S)(\ell_0) = \{S \in \mathcal{S}: \lambda(S) = \ell_0\}\). The

function \(\theta\) is said to be \(R_{\theta,\lambda}\)-identifiable at

\(\ell_0 \in \Lambda\) if there exists \(\vartheta_0 \in \Theta\), such

that, for all \(S \in \mathcal{S}(\ell_0)\), we have that

\(\theta(S)=\vartheta_{0}\).

The function \(\theta\) is said to be \(R_{\theta,\lambda}\)-identifiable everywhere from \(\lambda\) if it is \(R_{\theta,\lambda}\)-identifiable at \(\ell\) for all \(\ell \in \Lambda\). We will usually simply say that \(\theta\) is identifiable.

This definition might seem really abstract, but it’s exactly the intuitive definition we gave in the introduction. It says that if the part \(\mathcal{S}(\ell_0)\) of the statistical universe \(\mathcal{S}\) that is coherent with the observed data \(\ell_0\) uniquely corresponds to a single estimand of interest — i.e. \(\theta(S) = \vartheta_0, \forall S \in \mathcal{S}(\ell_0)\) — then that estimand is identifiable!

Examples

Below we describe how the above definition applies to parametric and nonparametric models as well as finite population settings.

Parametric models

Consider a parametric model where \(P(\vartheta)\) is a distribution indexed by \(\vartheta \in \Theta\).

The statistical universe is then \(S = {(P(\vartheta),\vartheta): \vartheta\in \Theta })\). Notice that it contains both the model and the parameters that determine the model.

The estimand mapping is \(\theta(S) = \vartheta\). This defines the quantity of interest: in the simplest case, it is just the parameters of the model but it can also be any function or subset of the parameters — i.e. \(\theta(S) = \phi(\vartheta)\).

The observation mapping is \( \lambda(S) = P(\vartheta) \). This is simply the model for a given parameter.

Remember, identification is about understanding the limits of our estimation with an infinite amount of data, and so the observations correspond exactly to the full distribution. If \(\phi(\vartheta) = \vartheta\), the induced binary relation \(R_{\theta,\lambda}\) reduces to a simple function \(R_{\theta,\lambda}: \vartheta \rightarrow P(\vartheta)\) and the abstract definition we gave above is exactly equivalent to the textbook definition of identification for parametric statistical models.

- Linear Regression Example

- Consider a \(p\)-dimensional random vector \(X \sim P(X)\) for some

distribution \(P_X\).

Let \(P(Y \mid X; \beta,\sigma^2) = \mathcal{N}(X^t\beta, \sigma^2)\), where

\(\mathcal{N}(\mu,\sigma^2)\) is the normal distribution with mean \(\mu\)

and variance \(\sigma^2\), and let \(P_{\beta,\sigma^2}(X,Y) = P(Y \mid X;

\beta, \sigma^2) P(X)\).

Example question: Are the regression parameters \((\beta\) and \(\sigma^2)\) identifiable?

Identification setup: We can study the identifiability of the parameter \(\vartheta = (\beta, \sigma^2)\) from the joint distribution \(P_{\vartheta}(X,Y)\) using our framework, by letting \(\mathcal{S} = \{(P_{\vartheta}, \vartheta), \vartheta \in \Theta\}\), where \(\Theta = \mathbb{R} \times \mathbb{R}^{+}\), \(\lambda(S) = P_{\vartheta}\), and \(\theta(S) = \vartheta\). In this case, the induced binary relation \(R_{\theta,\lambda}\) is reduces to a function and so \(\vartheta\) is identifiable iff the function \(R_{\theta,\lambda}\) is injective. It is easy to verify that this is the case if and only if the matrix \(E[X^tX]\) has full rank. If in contrast, we take \(\theta’(S) = \beta\), then the induced binary relation \(R_{\theta’, \lambda}\) is no longer a function, but our general definition of identifiability still applies.

Nonparametric identification

Applying the mathematical definition of identification to nonparametric models is relatively straight forward.

- Missing Data Example

- If \(Y\) is a random variable representing a response of interest, let

\(Z\) be a missing data indicator that is equal to \(1\) if the response

\(Y\) is observed, and \(0\) otherwise; that is, the data we actually observe

is drawn from \(P(Y \mid Z=1)\).

Example question: Is the distribution of the missing outcomes \(P(Y \mid Z=0)\) identifiable from that of the observed outcomes \(P(Y \mid Z=1)\) combined with that of the missing data indicator \(P(Z)\)?

Identification setup: Let \(\mathcal{S}\) be a family of joint distributions for \(Z\) and \(Y\), and define \(\lambda(S) = (P(Y \mid Z=1), P(Z))\), and \(\theta(S) = P(Y \mid Z=0)\). The question can be answered by studying the injectivity of the induced mapping \(R_{\theta, \lambda}\). We let that question unanswered for now, but will revisit it in a subsequent installment of this series.

Notice that the same definition worked in both nonparametric and parametric models without having to be adjusted or tweaked.

Finite population identification

In the previous two sections, we assumed an infinite amount of data—that is the usual starting point for identification. But what do you do if you plan to take a finite population perspective? Clearly, the assumption that you have an infinite amount of data doesn’t make sense! It turns out that we can again use the mathematical definition of identification by defining the appropriate statistical universe, estimand mapping, and observation mapping.

- Causal Inference Example

- With \(N\) units, let each unit be assigned to one of two treatment interventions, \(Z_i =1\) for treatment and \(Z_i=0\) for control. Under the stable unit treatment value assumption each unit \(i\) has two potential outcomes \(Y_i(1)\) and \(Y_i(0)\), corresponding to the outcome of unit \(i\) under treatment and control, respectively. For each unit \(i\), the observed outcome is \(Y^{\ast}_i = Y_i(Z) = Y_i(1) Z_i + Y_i(0) (1-Z_i)\). Let \(Y(1) = {Y_1(1), \ldots, Y_N(1)}\) and \(Y(0) = {Y_1(0), \ldots, Y_N(0)}\) be the vectors of potential outcomes and \(Y = (Y(1), Y(0))\).

Example question: Is \(\tau(Y) = \overline{Y(1)} - \overline{Y(0)}\) identifiable from the observed data \((Y^\ast, Z)\).

Identification setup: Let \(\mathcal{S}_Y = \mathbb{R}^N \times \mathbb{R}^N\) be the set of all possible values for \(Y\), \(\mathcal{S}_Z = \{0,1\}^N\) be the set of all possible values for \(Z\), and \(\mathcal{S} = \mathcal{S}_Y \times \mathcal{S}_Z\).

Take \(\theta(S) = \tau(Y)\) and \(\lambda(S) = (Y^\ast, Z)\) as the estimand and observation mapping, respectively. The question is then answerable by studying the injectivity of the induced binary relation \(R_{\theta, \lambda}\).

Once again, we will revisit this example in more details in a future post — our goal here is simply to show how identification questions can be formulated in a unified fashion within a simple framework.

Further reading

We dive deeper into some of the formalism behind identification in a recent paper. A lot of the pioneering work on identifiability was done by the economist Charles Manski: see for instance his 2009 monograph ‘Identification for prediction and decisions’ for a very accessible introduction. For a recent in-depth survey of the area, see Lewbel 2019: ‘The identification Zoo’. Finally, as mentioned in the introduction, this is the first installment in a series dedicated to identifiability — stay tuned for the next installments!

References

Lewbel, A. (2019). The identification zoo: Meanings of identification in econometrics Journal of Economic Literature.

Manski, C. (2009) Identification for prediction and decisions. Harvard University Press.